I made a renderer of sorts using ICE.

I was preparing some compounds to do world-to-screenspace stuff in ICE when I thought I’d give this a try. I wanted to see if I could use a screenspace grid of particles, set to fit in the camera frustum, to shoot rays and gather info from geometry in the scene in a view-centric way.

It was so easy that I extended the idea to see if I could render an image, through ICE, from data gathered from the scene like a raytrace renderer would.

It’s not a raytracer implemented in ICE maths: it uses the factory Raycast node to do all the geo-ray intersections. I was more interested in seeing if I could use the raycast pointlocators to do some classic 3d shading stuff.

My approach was to generate a grid of points in the space of the camera’s view, shoot rays out against the geometry in the scene, store some basic render-state variables to the cloud, and then use the state variables to colour the points as if they were fully shaded samples.

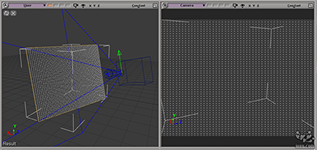

1 - making a screen-space cloud grid

I used a Model > Get > Primitive > Point Cloud > Grid to make a grid of points.

Some trig on the camera’s fov sized the grid to the frustum, and a matrix mult by the camera’s global transform then moved the grid into the perspective-space of the camera.

I added controls for dealing with grid subdivisions and camera aspect to keep the grid fitting the frustum perfectly, and a control for defining the depth from the camera of the screen-space cloud.
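For anyone who wants to see the trig outside of ICE, here is a minimal Python sketch of the idea. It is not the ICE tree itself: the function names are made up for illustration, and it assumes a vertical fov in degrees, a camera looking down its local -Z axis, and a 4x4 matrix with the translation in the last column.

```python
import math

def screen_grid(fov_deg, aspect, depth, cols, rows):
    """Camera-space points on a plane `depth` units in front of the camera.

    Assumption: fov_deg is the vertical field of view, camera looks down -Z.
    """
    half_h = depth * math.tan(math.radians(fov_deg) / 2.0)
    half_w = half_h * aspect
    points = []
    for j in range(rows):
        for i in range(cols):
            # Sample at cell centres so the cells tile the frustum edge to edge.
            u = (i + 0.5) / cols * 2.0 - 1.0
            v = (j + 0.5) / rows * 2.0 - 1.0
            points.append((u * half_w, v * half_h, -depth))
    return points

def transform_point(m, p):
    """Apply a 4x4 matrix (translation in the last column) to a 3D point."""
    x, y, z = p
    return tuple(m[r][0] * x + m[r][1] * y + m[r][2] * z + m[r][3] for r in range(3))

# Hypothetical camera global transform: no rotation, positioned at (0, 2, 10).
camera_global = [[1, 0, 0, 0],
                 [0, 1, 0, 2],
                 [0, 0, 1, 10],
                 [0, 0, 0, 1]]
grid = [transform_point(camera_global, p)
        for p in screen_grid(90.0, 1.5, 1.0, 4, 2)]
```

Changing `depth` slides the whole grid along the view axis while keeping it fitted to the frustum, which is what the depth control does.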

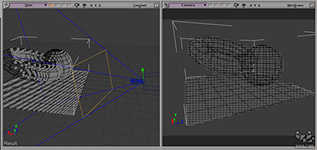

2 - using the grid to raycast into the scene

Now that I had the world-space points fitting to the camera, I could cast rays against a geometry group to gather data and store variables.

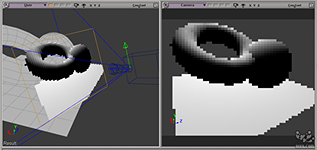

I stored the intersected location (world-space, stored as gatherP) and the geometry normal at the intersection (world-space, stored as gatherN). I could then show the particles projected onto the scene.
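As a rough illustration of what gets stored per point, here is a Python sketch. The real setup uses the factory Raycast node against arbitrary geometry; this stand-in only intersects a single sphere, and it assumes each ray runs from the camera position through its grid point. The names `raycast_sphere` and `gather` are hypothetical.

```python
import math

def raycast_sphere(origin, direction, centre, radius):
    """Return (hit_position, hit_normal) or None. `direction` must be unit length.

    Simplification: only hits from outside the sphere are reported.
    """
    oc = tuple(o - c for o, c in zip(origin, centre))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    if t < 0.0:
        return None
    p = tuple(o + t * d for o, d in zip(origin, direction))
    n = tuple((pi - ci) / radius for pi, ci in zip(p, centre))
    return p, n

def gather(camera_pos, grid_points, centre, radius):
    """Shoot one ray per grid point and keep gatherP / gatherN for the hits."""
    samples = []
    for q in grid_points:
        d = tuple(a - b for a, b in zip(q, camera_pos))
        length = math.sqrt(sum(x * x for x in d))
        d = tuple(x / length for x in d)
        hit = raycast_sphere(camera_pos, d, centre, radius)
        if hit is not None:  # points that miss the scene are dropped
            gatherP, gatherN = hit
            samples.append({"gatherP": gatherP, "gatherN": gatherN})
    return samples

samples = gather((0.0, 0.0, 0.0),
                 [(0.0, 0.0, -1.0), (5.0, 0.0, -1.0)],
                 centre=(0.0, 0.0, -5.0), radius=1.0)
```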

I made a switch so that it wasn’t necessary to have the points projected out onto the scene for the system to work; I could also leave them at the camera-driven screen plane.

Having gatherP stored, it was then easy to make the first bit of shading. Simple depth from camera: (the points here are sitting on the screen plane)
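A depth shade like this boils down to a distance and a remap. Here is a small Python sketch, assuming hypothetical `near` and `far` controls for the remap range (the post doesn't say how the depth was normalised):

```python
import math

def depth_shade(gatherP, camera_pos, near, far):
    """Grey value from the camera distance: 1.0 at `near`, 0.0 at `far`."""
    dist = math.dist(gatherP, camera_pos)
    t = (dist - near) / (far - near)
    t = min(1.0, max(0.0, t))  # clamp so out-of-range points stay valid colours
    return 1.0 - t

grey = depth_shade((0.0, 0.0, -4.0), (0.0, 0.0, 0.0), near=2.0, far=6.0)
```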

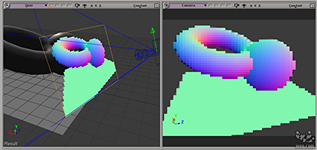

Following naturally were camera-space normals as a function of gatherN and the camera’s orientation:
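One way to read that step: rotate the world-space normal by the inverse of the camera's rotation, then remap it to a colour. The Python sketch below assumes a 3x3 rotation matrix whose columns are the camera's X, Y and Z axes in world space (so the inverse is just the transpose), plus the usual 0.5 * n + 0.5 remap for display. Both are illustrative choices, not necessarily what the ICE tree does.

```python
def to_camera_space(gatherN, cam_rot):
    """Multiply the normal by the transpose (= inverse) of the camera rotation."""
    return tuple(sum(cam_rot[r][c] * gatherN[r] for r in range(3))
                 for c in range(3))

def normal_to_colour(n):
    """Remap components from [-1, 1] to [0, 1] so they can be shown as RGB."""
    return tuple(0.5 * x + 0.5 for x in n)

# Hypothetical camera rotated 90 degrees about world Y:
# its local Z axis points along world +X.
cam_rot = [[0.0, 0.0, 1.0],
           [0.0, 1.0, 0.0],
           [-1.0, 0.0, 0.0]]
n_cam = to_camera_space((1.0, 0.0, 0.0), cam_rot)
colour = normal_to_colour(n_cam)
```

A surface facing straight along the camera's local Z ends up with the familiar bluish normal-map colour.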

I made controls for making sure that particles’ Scales and Sizes (with the Rectangle particle Shape) were updated as a function of cloud grid subdivisions, camera fov, camera aspect and depth from camera. The particles appear to retain their size when viewing through the camera, no matter what their depths are from camera. Good ol’ Tan.
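The size relationship can be sketched in a few lines of Python. This assumes a vertical fov in degrees and a depth measured along the camera's view axis; `particle_size` is a made-up name, not something from the ICE tree.

```python
import math

def particle_size(fov_deg, aspect, depth, cols, rows):
    """World-space width and height of one grid cell at a given depth."""
    frustum_h = 2.0 * depth * math.tan(math.radians(fov_deg) / 2.0)
    frustum_w = frustum_h * aspect
    return frustum_w / cols, frustum_h / rows

w_near, h_near = particle_size(90.0, 1.5, 1.0, 4, 2)
w_far, h_far = particle_size(90.0, 1.5, 2.0, 4, 2)
```

Because the cell size grows linearly with depth, a particle twice as far away is made twice as big, and it covers the same area of the image.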

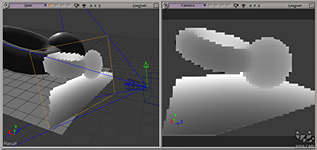

3 - colouring the particles (shading)

3.1 - lighting

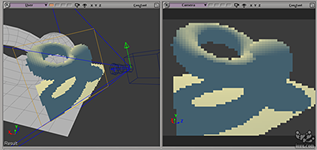

Classic Lambert illumination.

I brought in a null’s transform to pose as a lightsource and to make a light direction (L) vector for gatherP. The dot product between gatherN and L creates the illumination model (Lambert).

Using another Raycast setup, I generated a raytraced shadow from the lightsource (using the lightsource null). Multiplying the light colour and adding ambience completes the basic model.
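Written out as plain Python, the basic model looks roughly like the sketch below. The shadow ray is left out: `in_shadow` stands for the result of that second raycast from gatherP towards the light, and all the names are illustrative.

```python
import math

def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def lambert(gatherP, gatherN, light_pos, light_colour, ambient, in_shadow):
    """ambient + light_colour * max(0, N.L), with the light cut out in shadow."""
    L = normalize(tuple(l - p for l, p in zip(light_pos, gatherP)))
    n_dot_l = max(0.0, sum(n * l for n, l in zip(gatherN, L)))
    visibility = 0.0 if in_shadow else 1.0
    return tuple(a + c * n_dot_l * visibility
                 for a, c in zip(ambient, light_colour))

lit = lambert((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 5.0, 0.0),
              (1.0, 1.0, 1.0), (0.1, 0.1, 0.1), in_shadow=False)
shadowed = lambert((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 5.0, 0.0),
                   (1.0, 1.0, 1.0), (0.1, 0.1, 0.1), in_shadow=True)
```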

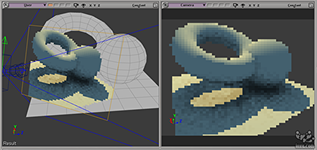

3.2 - ambient occlusion

Using gatherP and gatherN, I used Raycast in a loop to do some ambient occlusion (multiplying the ambient colour before adding to the other lighting). It’s pretty slow but it totally shows the idea.
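The loop can be sketched in Python along these lines. It is a stand-in rather than the ICE setup: the occluders are plain spheres instead of scene geometry, the sample directions are uniform random over the hemisphere around gatherN, and the small offset along the normal is an assumed guard against self-intersection.

```python
import math
import random

def hits_sphere(origin, direction, centre, radius):
    """True if a unit-length ray hits the sphere in front of its origin."""
    oc = tuple(o - c for o, c in zip(origin, centre))
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c
    return disc >= 0.0 and (-b - math.sqrt(disc)) > 0.0

def ambient_occlusion(gatherP, gatherN, occluders, samples, seed=0):
    """Fraction of hemisphere rays that escape: 1.0 = open, 0.0 = fully blocked."""
    rng = random.Random(seed)
    origin = tuple(p + 1e-4 * n for p, n in zip(gatherP, gatherN))
    blocked = 0
    for _ in range(samples):
        # Normalised Gaussian triple gives a uniform direction on the sphere.
        d = tuple(rng.gauss(0.0, 1.0) for _ in range(3))
        length = math.sqrt(sum(x * x for x in d)) or 1.0
        d = tuple(x / length for x in d)
        # Flip directions pointing into the surface back over the normal.
        if sum(a * b for a, b in zip(d, gatherN)) < 0.0:
            d = tuple(-x for x in d)
        if any(hits_sphere(origin, d, c, r) for c, r in occluders):
            blocked += 1
    return 1.0 - blocked / samples

open_sky = ambient_occlusion((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), [], 64)
covered = ambient_occlusion((0.0, 0.0, 0.0), (0.0, 1.0, 0.0),
                            [((0.0, 100.0, 0.0), 99.9)], 64)
```

The returned value is what multiplies the ambient colour before it is added to the rest of the lighting. The cost is one ray per sample per particle, which is why raising the sample count slows things down so quickly.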

Here’s a Vimeo of it all in action


download

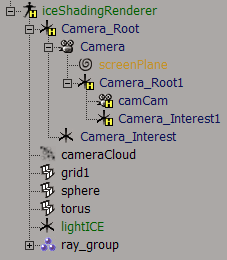

I’ve made a model (.emdl) for download that contains all the ICE trees and shows the system updating. There is a DisplayInfo property on the pointcloud that has some controls expressioned into the ICE trees.

This whole thing was a brief throwdown of an idea, so the ICE trees are only roughly commented, and the maths is pretty loose, but it shows how it works. Probably the most complicated stuff in there are the few matrix-mults I had to use to transform vectors into the camera’s perspective and global transforms.

- look through the camera iceShadingRenderer.Camera.

- important: the system does not update in playback mode. Play with it parked on a single frame.

- the ray_group contains the geometries to trace against (but I had some update trouble when I added or removed objects: I had to create new geometry access nodes and re-hook them up each time).

- move the lightICE null around to see the lighting update.

- ambient occlusion samples are controlled on the DisplayInfo PPG on the particle cloud.

download iceShadingRenderer.zip

(121kb, made with XSI7.01)

please let me know if there’s a problem with the download.

Tags: 3d, ice, particles, realtime, softimage, xsi

Dude! That’s friggin insane! Nice work!

Really nice one !

ICE is so cool to visualize how 3d works. It should be used to learn 3d in every school :).

Thanks for sharing your model !

Hey, no problem Guillaume! I love to share :D

I agree, things like ICE and Processing, etc, have so much amazing potential for making maths, science and art accessible to human beings.

btw: i should implement something like Test D in this post of yours :D

Pretty cool.

Does it have a pixel filter? I.e. are neighbouring cells averaging their values together?

No filtering, man.

I had started to think about antialiasing once I’d finished this post, but I actually delete particles really early that don’t intersect with scene geo. I reckon a rehash of this setup could enable supersampling plus sample filtering.

But, you know me .. I’ve lost interest already :D

I just can’t say anything.. that’s just freaking amazing, I love you!<3 No, really I do! = D Keep it up, I think this is the shit that makes ice so awesome.